Graphical user interfaces are everywhere. Even users who sing the praises of the “terminal lifestyle” run a graphical desktop environment, or at the very least a window manager. How else would you be reading this blog right now, if it weren’t for your graphical web browser?

If you use Linux (or any similar UNIX-like OS), you have most likely heard names such as X11, X.Org or Wayland at some point, without knowing much about their roles, let alone their differences. You can probably figure out that they all have something to do with displaying windows on a screen, but that’s usually as far as the guess goes – it would be fair to assume most people neither need nor want to know more than that to be able to use their computers.

However, there have been many interesting developments in the domain of display protocols in recent years, particularly with the rise of the Wayland protocol.

This article is a short introduction to the current situation in the world of display protocols, starting with a bit of historical context, before detailing the evolution of X – the most widely used display protocol, and briefly introducing Wayland – which aims to be its successor.

In the beginning…

It wasn’t long after the release of the first GUI-capable computers in the 1970s and 1980s (most notably the Xerox Alto in 1973 and the Apple Lisa in 1983) that graphical environments started appearing in the UNIX world.

One of the first notable GUIs for UNIX systems was developed in 1983 through a partnership between IBM and Carnegie Mellon University, as part of what’s known as the Andrew Project (which you might know for introducing the Andrew File System, or AFS). The Andrew Project was a computing environment that powered all computers on the university’s campus, displaying graphical windows via a tiling window system: the Andrew Window Manager (AWM).

The Andrew Window Manager, 1986

Source: Data Structures in the Andrew Text Editor, Wilfred J. Hansen

Though AWM was portable and fast, licensing issues between IBM and Carnegie Mellon University prevented it from being widely distributed and gaining popularity.

At the same time, the Massachusetts Institute of Technology (MIT) was working on its own campus-wide IT environment, under the name Project Athena. With the Andrew Window Manager restricted from public use, they started working on the development of their own window system: the X Window System.

What is a Window System?

Before continuing, we need a definition of what a “window system” actually is. James Gosling, original designer of the Andrew Window Manager, defines window systems as follows in a short 2002 article titled “Window System Design: If I had it to do over again in 2002”:

The term “window system” is somewhat loose. It generally refers to the mechanism by which applications share access to the screen(s), keyboard and mouse. Beyond this it generally contains facilities for inter-application messages such as support for cut-and-paste, and drag-and-drop. It also often contains support for the decorations surrounding windows that provide the user interface for resizing, opening and closing windows; although in some systems this has been left up to the application. Sometimes the window system provides higher level abstractions like menus.

With this definition out of the way, we can shift our attention to the main course of this story: the X Window System.

The X Window System

Following its first release by MIT in 1984, the X Window System (or X, for short) quickly became the most popular choice for third parties looking for a windowing system, thanks to its ability to run on a wide range of hardware and its active development.

X has been the standard UNIX display protocol for more than three decades, but is ever so slowly starting to fade out. Let’s dive deeper into what made X successful, and into its limitations today.

X, X11, X.Org…

Let us start with a bit of context regarding the jungle of X nomenclature.

The name “X” comes from the window system it was initially based on: the W Window System. This project was born at Stanford University and was named “W” after the operating system it was designed for: the V operating system. The teams at MIT based their initial work on the source code of W, and thus decided to name the project “X”, as the successor to W.

The development of X was led by MIT until the release of X11 in 1987, at which point the project had become so successful that the university wished to hand its stewardship over to a third party. Development of X was subsequently led by several entities: the X Consortium, the Open Group, XFree86 and lastly, the X.Org Foundation (since 2004).

To this day, the development of the main implementation of X is led by the X.Org Foundation, and X11 is the latest version of the core protocol, which is why you will often see the terms “X”, “X11” and “X.Org” used interchangeably.

The X network protocol

X is a network-based window system: it defines a network protocol that enables users to display applications on their computer monitor by communicating with a remote machine.

While this concept is virtually obsolete nowadays, it was essential in the 1980s when X was first released – back then, basic workstations (“thin clients”) were too weak for general purpose computing, so they would connect to a more powerful mainframe over a local network, which would do the heavy lifting for them and return what needed to be displayed. This client-server communication was initially found in command-line environments, but was also relevant for displaying GUIs.

The often confusing part with X is that the server is actually the process that’s running on the user’s workstation, and the clients are individual applications (e.g. e-mail clients, terminal emulators, etc.) that can run on a remote mainframe.
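In practice, an X client finds its server through the `DISPLAY` environment variable, written as `host:display.screen`. Here is a minimal sketch of how that string maps to a connection endpoint, assuming the usual conventions: a display with no host (`:0`) is reached through the local Unix socket `/tmp/.X11-unix/X0`, while a display on another machine is reached over TCP on port 6000 plus the display number. `parse_display` and `XDisplay` are hypothetical names, and the parser deliberately ignores IPv6 addresses, protocol prefixes and authentication.

```python
from dataclasses import dataclass

X_TCP_BASE_PORT = 6000  # display N of a remote X server listens on TCP port 6000 + N


@dataclass
class XDisplay:
    host: str     # empty string: the X server runs on this very machine
    display: int  # which X server on that host
    screen: int   # which screen of that server

    def endpoint(self) -> str:
        """Where a client would connect to reach this X server."""
        if self.host in ("", "unix"):
            return f"/tmp/.X11-unix/X{self.display}"
        return f"{self.host}:{X_TCP_BASE_PORT + self.display}"


def parse_display(value: str) -> XDisplay:
    """Split a DISPLAY string such as 'workstation:1.0' (simplified)."""
    host, sep, rest = value.rpartition(":")
    if not sep:
        raise ValueError(f"not a DISPLAY string: {value!r}")
    number, _, screen = rest.partition(".")
    return XDisplay(host=host, display=int(number), screen=int(screen or 0))


# A client on the mainframe, displaying on the user's workstation (the X server):
print(parse_display("workstation:1.0").endpoint())  # workstation:6001
# A client and server on the same machine:
print(parse_display(":0").endpoint())               # /tmp/.X11-unix/X0
```

Note the direction: `DISPLAY` is set on the machine running the client, and it names the user’s workstation as the server.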

While the X server is capable of doing primitive window management on its own, the first launched client is usually a “window manager”, which will take care of nicely laying out all subsequent windows on-screen for the user to interact with.

Twm, the standard X window manager

Source: Wikimedia

In X’s client-server model, mouse and keyboard input from the user’s machine goes through the kernel and is then captured by the X server, which queues it as an event and sends it to the relevant client. Upon receiving the event, the client updates the internal state of the application and sends a request back to the server, asking it to reflect that state graphically on the user’s screen (e.g. text written to a text field, a checkbox ticked, etc.).
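The round trip above can be sketched as a toy, single-process model. Everything here is hypothetical and heavily simplified: in real X, events and requests travel as binary messages over a socket, and a client library such as Xlib or XCB handles the encoding. Only the event name `ButtonPress` is borrowed from X.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class Event:
    window: int  # window the input is aimed at
    kind: str    # e.g. "ButtonPress", one of X's core event types


@dataclass
class CheckboxClient:
    """Hypothetical X client whose only window is a checkbox."""
    window: int
    checked: bool = False

    def handle(self, event: Event) -> list[str]:
        # Step 1: update the application's internal state.
        if event.kind == "ButtonPress":
            self.checked = not self.checked
        # Step 2: send a drawing request back to the server.
        mark = "[x]" if self.checked else "[ ]"
        return [f"draw window={self.window} checkbox={mark}"]


@dataclass
class ToyXServer:
    clients: dict[int, CheckboxClient]
    queue: deque[Event] = field(default_factory=deque)
    screen: list[str] = field(default_factory=list)  # requests the server has "drawn"

    def on_kernel_input(self, window: int, kind: str) -> None:
        """Input arriving from the kernel is queued as an event."""
        self.queue.append(Event(window, kind))

    def dispatch(self) -> None:
        while self.queue:
            event = self.queue.popleft()
            requests = self.clients[event.window].handle(event)  # server -> client
            self.screen.extend(requests)                          # client -> server


server = ToyXServer(clients={1: CheckboxClient(window=1)})
server.on_kernel_input(1, "ButtonPress")
server.dispatch()
print(server.screen)  # ['draw window=1 checkbox=[x]']
```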

After a couple of years though, processing power became plentiful enough for the X server and its clients to all run on a single machine. The remaining problem in that case was the latency overhead of pushing every request through a client-server protocol designed for the network, only to achieve interprocess communication within a single machine.

This change in paradigm meant that X needed to evolve and adapt, but how?

X protocol extensions

As time went on, both the hardware and use-cases for computers evolved:

- input devices would become more complicated than the simple keyboard and mouse combo supported by the core X11 protocol,
- screens would need to be rotated and rescaled,
- as mentioned above, latency inherent to the client-server model would need to be reduced.

X needed to be updated to tackle these kinds of issues. Because of internal politics though, these updates were not made by releasing a new version of the core protocol, but through what we call “extensions” to the X11 protocol.

Examples of essential extensions to the core protocol include:

- X Input, to support more input devices (e.g. multi-touch devices, joysticks, etc.),
- X Resize, Rotate and Reflect (or XRandR for short), a display management extension that includes features such as multi-monitor support and setting a monitor’s refresh rate, orientation, etc.,
- MIT-SHM, which enables X to use shared memory for communications between the X server and its clients in order to reduce latency,
- …and about twenty more, most of which I don’t know anything about because that’s way too many. You can find them here under “X11 Protocol & Extensions” if you’re interested.

The trouble with X starts here. In practice, essential features for modern use cases had to be layered on top of the old, obsolete X11 base from 1987, creating legacy bloat that modern X server implementations still carry to this day in order to comply with the core X11 protocol.

Network transparency

One of X’s initial goals was to be a “network transparent” system. A network transparent system is one where the clients and the server communicate seamlessly over the network, without requiring that both of them run on the same architecture/operating system. This design would enable a user to use a full X environment over the network, which was especially important back in the 1980s.

While the core X11 protocol is indeed network transparent, many extensions that have become essential for modern use have broken this concept. Besides the extensions that assume that the clients and the X server run on the same machine, there are use-cases today that simply did not exist back in the day. One example of this is… web browsing. X was obviously not initially designed to be able to transport high-resolution snapshots of a web page through the network, all while maintaining low latency and a steady framerate.

This means that one of the main reasons why X was a network protocol in the first place is now practically obsolete.
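A back-of-the-envelope calculation shows why the web browsing example is so demanding. Assume a 1920×1080 display, 4 bytes per pixel and 60 frames per second, and assume the worst case where every frame’s pixels cross the network uncompressed, which is roughly what happens when a client renders page content itself and ships the resulting images to the server. `raw_bandwidth_bps` is a hypothetical helper.

```python
def raw_bandwidth_bps(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Bits per second needed to send every full frame uncompressed."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * fps * 8


bps = raw_bandwidth_bps(1920, 1080, 4, 60)
print(f"{bps / 1e9:.2f} Gbit/s")  # 3.98 Gbit/s
```

That is roughly four times what a gigabit Ethernet link can carry, before counting any protocol overhead.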

Compositors

Back when X was first released, computers shipped with only a few megabytes of RAM. For that reason, it was impossible to store the current state of the display in memory before showing it on screen – the only state was stored directly in the frame buffer and hence, reflected directly on the screen. This meant that clients were tasked with redrawing the parts of their window that became visible again whenever windows were moved around on the user’s screen. This was highly inefficient, a burden for application developers, and caused flickering on-screen when moving windows around if applications were too slow to redraw (which they often were).

RAM capacity was rapidly increasing at the turn of the century, and a new extension to the protocol, Composite, was released in 2004 with the goal of solving this buffering problem.

Composite enables the contents of every window to be rendered into its own off-screen buffer in RAM, and relies on a process called the compositor to assemble all of these buffers together before tasking the X server with displaying the final result on screen. With compositing being so closely related to managing windows, many window managers are also compositors (or “compositing window managers”), so much so that these window managers are often simply called compositors.
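The idea behind Composite can be sketched with a toy compositor in which windows are tiny grids of characters. This is a simplified illustration, not how real compositors are written (they work with GPU textures, transparency and damage tracking): each window keeps its own off-screen buffer, and the compositor paints the buffers back to front (the painter’s algorithm) into a fresh frame. `Window` and `composite` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Window:
    name: str
    x: int
    y: int
    buffer: list[str]  # the window's off-screen pixels, one string per row


def composite(windows: list[Window], width: int, height: int, background: str = ".") -> list[str]:
    """Paint every window's buffer into a new frame; later windows are stacked on top."""
    frame = [[background] * width for _ in range(height)]
    for win in windows:
        for dy, row in enumerate(win.buffer):
            for dx, pixel in enumerate(row):
                sx, sy = win.x + dx, win.y + dy
                if 0 <= sx < width and 0 <= sy < height:  # clip to the screen
                    frame[sy][sx] = pixel
    return ["".join(row) for row in frame]


term = Window("term", 0, 0, ["TTT", "TTT"])
mail = Window("mail", 2, 1, ["MM", "MM"])
print(composite([term, mail], 6, 3))  # ['TTT...', 'TTMM..', '..MM..']

mail.x = 4  # move the mail window two cells to the right
print(composite([term, mail], 6, 3))  # ['TTT...', 'TTT.MM', '....MM']
```

Moving `mail` reveals the part of `term` it used to cover, and that part comes straight from `term`’s buffer: no client has to redraw anything.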

The X server: a redundant middle man

Nowadays, compositors are practically ubiquitous, and window management duty (among other complicated tasks, such as font rendering) has been completely taken away from the X server. At that point, there is not much left for it to do.

The only thing the X server does now is handle user input, take drawing requests from X clients, and ask the compositor to render that request as it sees fit.

The compositor does just about everything, and yet it still has to chat back and forth with the X server, because it’s the only process that can access the graphics hardware in order to display the result on screen!

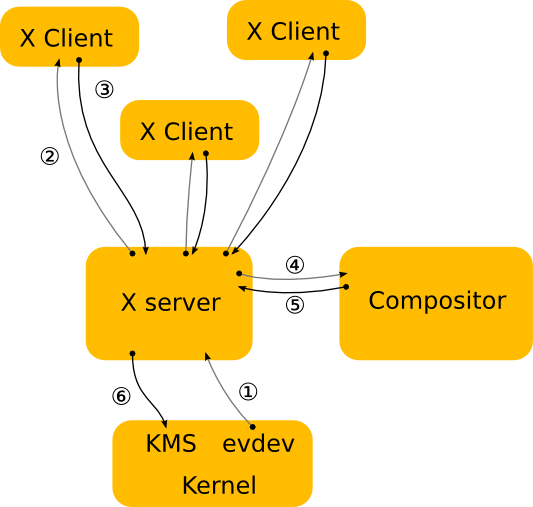

This flowchart outlines the path from user input (1) to screen output (6) when using X.

Note: evdev and KMS are components of the Linux kernel. evdev is the interface that handles peripheral input, and KMS is the component that manages display mode setting.

Source: Wayland Documentation

This mix of unused legacy features, verbosity and redundancy in X prompted new development efforts on display protocols, with one of the most important projects being known as Wayland.

Wayland

The inception of Wayland

This is the story of a Danish developer named Kristian Høgsberg.

In 2008, Høgsberg was a developer at Red Hat, where he mainly worked on X. One of his most notable achievements there was the DRI2 (Direct Rendering Infrastructure 2) protocol extension, which enables X clients to access the GPU directly without having to go back and forth through the X server.

Annoyed by X’s overly complex and outdated design, Høgsberg set out to create a new, simpler display protocol with no middle man between the clients and the compositor. This was the start of the Wayland project.

The Wayland protocol

Wayland is a display protocol similar to X, where a server handles user input (from the kernel) and graphical output (to the kernel), and where clients communicate with the server to update the content of windows on the screen.

The main difference between the two is that in Wayland, the roles of the server, of the window manager, and of the compositor are all merged into one process. This process is called the Wayland compositor (or sometimes the Wayland server).

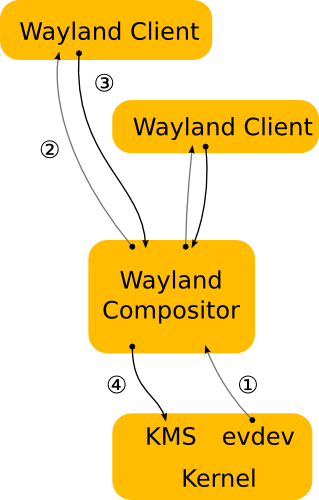

This flowchart outlines the path from user input (1) to screen output (4) when using Wayland.

Source: Wayland Documentation

In this model, communication between the clients and the compositor is greatly simplified. Wayland clients use direct rendering methods similar to those of DRI2, and simply inform the compositor of the region of their window that was updated. The compositor assembles the frame, and then communicates directly with the kernel to refresh the screen.
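The exchange described above maps onto three requests of the core `wl_surface` interface: `attach` (hand the compositor a new buffer), `damage` (say which region changed) and `commit` (apply both atomically). The following is a toy, single-process model of that flow. `ToySurface` and `ToyCompositor` are hypothetical, buffers are plain strings rather than shared-memory or GPU buffers, and the surface is assumed to sit at the top-left corner of the screen; real clients issue these requests through libwayland’s C API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

Rect = tuple[int, int, int, int]  # x, y, width, height


@dataclass
class ToySurface:
    """Mimics wl_surface's double-buffered state: nothing is visible until commit."""
    pending_buffer: list[str] | None = None
    pending_damage: list[Rect] = field(default_factory=list)
    current_buffer: list[str] | None = None

    def attach(self, buffer: list[str]) -> None:
        self.pending_buffer = buffer

    def damage(self, x: int, y: int, width: int, height: int) -> None:
        self.pending_damage.append((x, y, width, height))

    def commit(self) -> list[Rect]:
        """Make the pending buffer current and hand the damage to the compositor."""
        self.current_buffer = self.pending_buffer
        damage, self.pending_damage = self.pending_damage, []
        return damage


class ToyCompositor:
    def __init__(self, width: int, height: int) -> None:
        self.screen = [["."] * width for _ in range(height)]
        self.pixels_repainted = 0

    def on_commit(self, surface: ToySurface, damage: list[Rect]) -> None:
        # Only the damaged rectangles are copied from the client's buffer.
        for x, y, w, h in damage:
            for sy in range(y, y + h):
                for sx in range(x, x + w):
                    self.screen[sy][sx] = surface.current_buffer[sy][sx]
                    self.pixels_repainted += 1


compositor = ToyCompositor(4, 2)
surface = ToySurface()

surface.attach(["abcd", "efgh"])  # first frame: the whole window is new
surface.damage(0, 0, 4, 2)
compositor.on_commit(surface, surface.commit())

surface.attach(["abcd", "efXh"])  # second frame: a single cell changed
surface.damage(2, 1, 1, 1)
compositor.on_commit(surface, surface.commit())

print(["".join(row) for row in compositor.screen], compositor.pixels_repainted)
# ['abcd', 'efXh'] 9
```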

Xwayland

While all this is amazing, there is one catch. If nobody develops for Wayland, nobody will ever use Wayland! For that reason, the Wayland ecosystem comes with an X11 compatibility layer called Xwayland, which enables X11 applications to run seamlessly inside a Wayland environment.

Xwayland is an X11 server that runs alongside Wayland clients; the main difference from a native X11 server is that it is modified to communicate with the Wayland compositor instead of the kernel for user input and graphical output.
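Because a Wayland session running Xwayland also exports `DISPLAY` so that X11 applications can find their server, checking `DISPLAY` alone cannot tell the two kinds of session apart. Below is a heuristic sketch for checking which one you’re in, based on environment variables that session managers commonly set: `XDG_SESSION_TYPE` (typically `wayland` or `x11`), `WAYLAND_DISPLAY` (the compositor’s socket name) and `DISPLAY`. `detect_session` is a hypothetical helper, and these variables can be missing or misleading, for instance over SSH.

```python
import os
from collections.abc import Mapping


def detect_session(env: Mapping[str, str] = os.environ) -> str:
    """Best-effort guess of the current session type: 'wayland', 'x11' or 'unknown'."""
    session_type = env.get("XDG_SESSION_TYPE", "").lower()
    if session_type in ("wayland", "x11"):
        return session_type
    # Check Wayland first: with Xwayland running, DISPLAY is set too.
    if env.get("WAYLAND_DISPLAY"):
        return "wayland"
    if env.get("DISPLAY"):
        return "x11"
    return "unknown"


print(detect_session())
```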

Wayland implementations

So, anyone can start using Wayland now, right? Yes!

GNOME and KDE have created Wayland versions of their compositors, which are still in active development but are almost feature-complete. Actually, if you use GNOME on a recent distribution of Linux (e.g. Fedora, Arch), chances are you are already running on Wayland and don’t even know it.

However, while there is a plethora of window managers to choose from in the X ecosystem, the difficulty of developing a Wayland compositor reduces the choice considerably.

A Wayland compositor needs to implement many mechanisms other than window management: interaction with the kernel for input and output, management of Xwayland, etc. And while the GNOME and KDE teams have allocated the development resources needed to build a Wayland compositor from scratch, the process is just too tedious for a developer that wants to focus on the “window management” part of a compositor.

To ease the development process, a set of modules that can serve as building blocks for developing Wayland compositors was created under the name wlroots:

wlroots implements a huge variety of Wayland compositor features and implements them right, so you can focus on the features that make your compositor unique.

The most popular wlroots-based compositor is Sway, a tiling compositor that aims at replicating the user experience of the i3 window manager under Wayland.

Feature (im)parity with X

The last topic to discuss is feature parity with X. While Wayland has come to be almost feature complete compared to X, there are still some points where it is lacking, the most obvious one being fractional scaling.

Fractional scaling allows the window system to scale windows by non-integer factors (e.g. 1.25x or 1.5x) for easier viewing on high pixel density monitors. Historically, this was done on Wayland by first scaling the windows up by an integer factor (2x, 3x, etc.), then scaling them down to the desired size. This resulted in degraded performance and blurry content.

But a lot of progress has been made in that regard recently, including the merging of the wp-fractional-scale-v1 Wayland protocol, which enables true fractional scaling as supported by X.
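Some rough arithmetic shows the cost of the old workaround. Take a window of 1280×720 logical pixels on a monitor scaled at 1.5x: it should end up as 1920×1080 physical pixels, but with integer scaling the client first renders at 2x, and the compositor then shrinks the result by a factor of 0.75, which is where the blur comes from. `fractional_scaling_cost` is a hypothetical helper. For reference, wp-fractional-scale-v1 sends the preferred scale as the numerator of a fraction over 120, so 1.5x is transmitted as 180.

```python
import math

WP_FRACTIONAL_SCALE_DENOMINATOR = 120  # wp-fractional-scale-v1 sends scale * 120


def fractional_scaling_cost(logical_w: int, logical_h: int, scale: float) -> dict:
    """Compare the legacy 'render at ceil(scale), then downscale' approach with direct rendering."""
    target = (round(logical_w * scale), round(logical_h * scale))  # pixels actually shown
    integer_scale = math.ceil(scale)
    rendered = (logical_w * integer_scale, logical_h * integer_scale)  # pixels drawn by the client
    return {
        "target": target,
        "rendered": rendered,
        "extra_pixels": (rendered[0] * rendered[1]) / (target[0] * target[1]),
        "downscale_factor": target[0] / rendered[0],
        "protocol_value": round(scale * WP_FRACTIONAL_SCALE_DENOMINATOR),
    }


cost = fractional_scaling_cost(1280, 720, 1.5)
print(cost["target"], cost["rendered"])                  # (1920, 1080) (2560, 1440)
print(f"{cost['extra_pixels']:.2f}x the pixels")         # 1.78x the pixels
print(cost["downscale_factor"], cost["protocol_value"])  # 0.75 180
```

With wp-fractional-scale-v1 (used together with the viewporter protocol), the client can instead render the 1920×1080 buffer directly, skipping both the extra pixels and the lossy downscale.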

There are even new use cases today where Wayland is leading the charge: HDR support has been in the works for some time and has been making steady progress recently. In contrast, development activity on the X.Org side has fallen to its lowest point in the last 20 years, and it is unclear whether such features will ever see the light of day.

In the end…

This article was an opportunity for me to learn more about the X and Wayland protocols, and I hope it was also a pleasant read for you.

X11 has been around for decades, and is host to a variety of great projects, particularly in the space of window managers. It is unclear whether the standalone X.Org server will keep a place on the UNIX desktop in the years to come, but one thing is clear: because of its design complexity, X is no longer the way forward, and Wayland will most definitely become the standard in the future. The only question that remains is: how far away is this future?

In any case, I suggest you give Wayland a fair shot and form your own opinion!